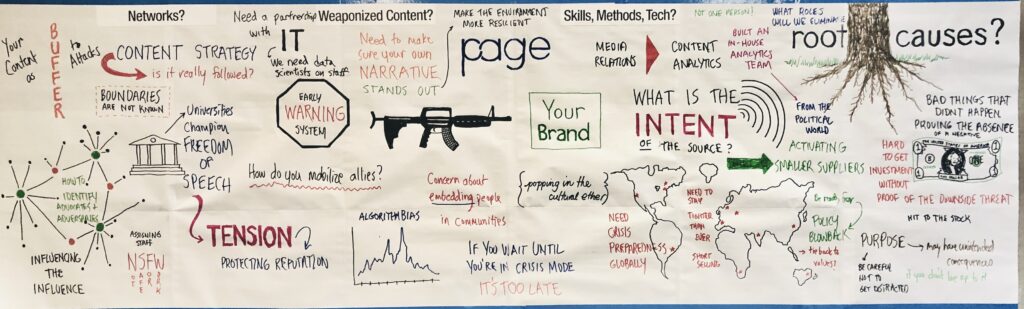

At last week’s #PageSpring Seminar, Ethan McCarty of Integral Communications and I led a conversation about a gathering storm for the communications profession: the weaponization of content to misinform people, often at scale through viral and/or paid distribution. The most prominent examples are in elections, but the risks for business are significant, and they are coming.

Here are a few observations from the conversation I led, which included about 30 Page and Page Up members from around the world.

There is insufficient awareness of how much misinformation is already aimed at companies and brands. When we asked how many people had seen any of the six to eight examples of brand attacks we showed, few raised their hands.

For most, such attacks seemed to be isolated occurrences, and the need to worry about them still a way off. Yet the evidence suggests they are happening now and are far more pervasive than most people think.

We discussed how we have to get better at understanding the intent of such attacks and reviewed three sources of weaponized content:

Almost no companies have a system in place to monitor for weaponized content. While almost all run social listening around the clock, those tools are not tuned to spot an attack originating on the dark web. Most companies seem to block dark web access for all employees, including the communications function, which leaves them blind to some of the places where weaponized content originates.

Tackling this new challenge will require new capabilities: hiring more data scientists, and identifying vendors who have access to the dark web (if your company does not) and can provide an early warning system.

Many thought it would initially be hard to make the case for investment in this area, since it feels like trying to prove a negative: it hasn’t happened to our company yet, so why invest? Yet many agreed that this could be a dangerous mindset, given how much evidence suggests that weaponized content will soon be part of our daily experience.

Is this an issue that’s on your radar?